This presentation may be hazardous or unsettling:

- Rapidly flickering images

- Vertiginous full screen zooms

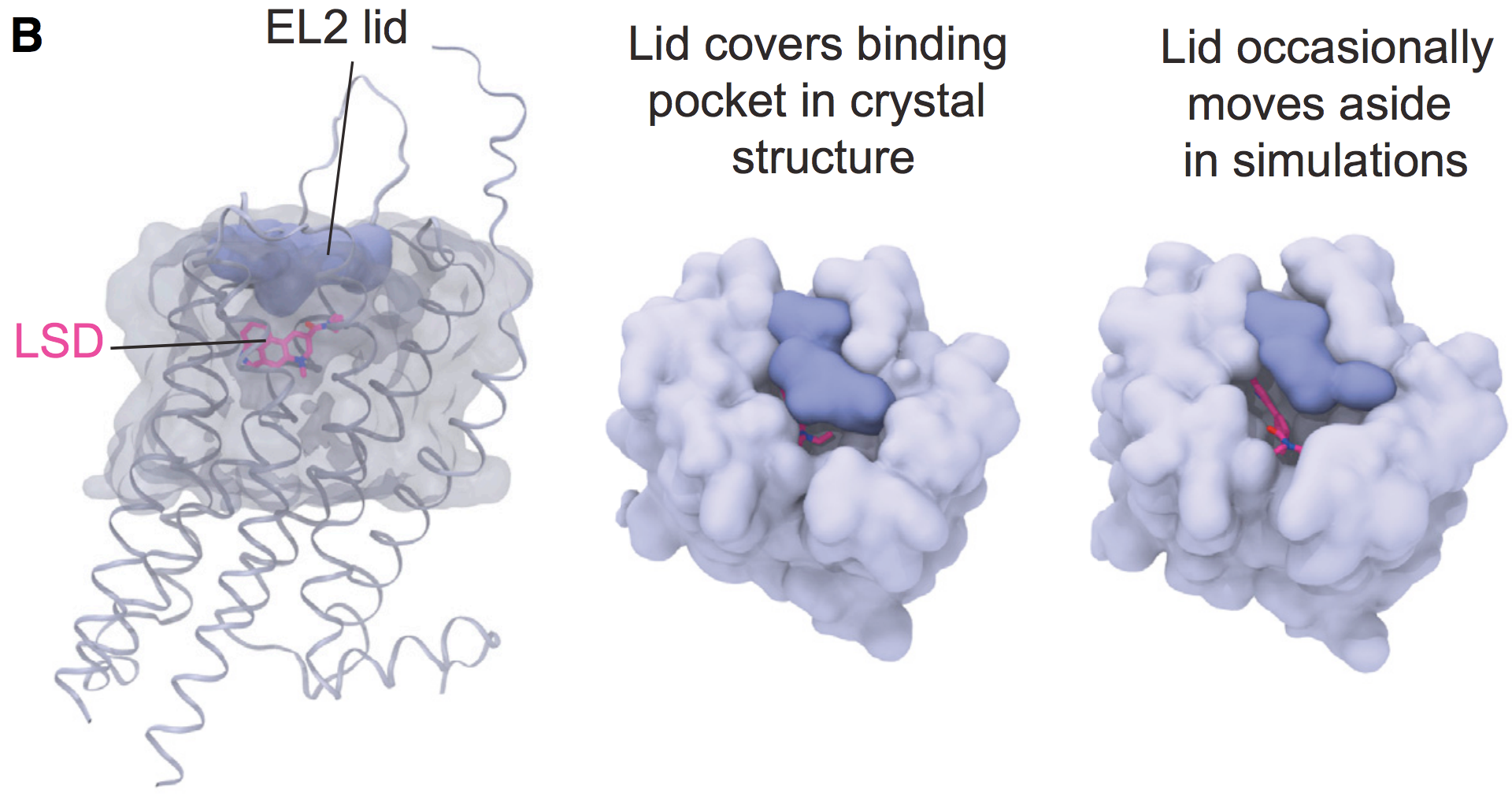

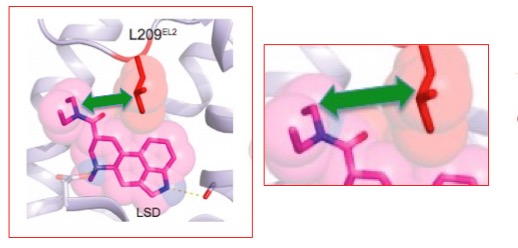

- Descriptions of drug use

- Suicide

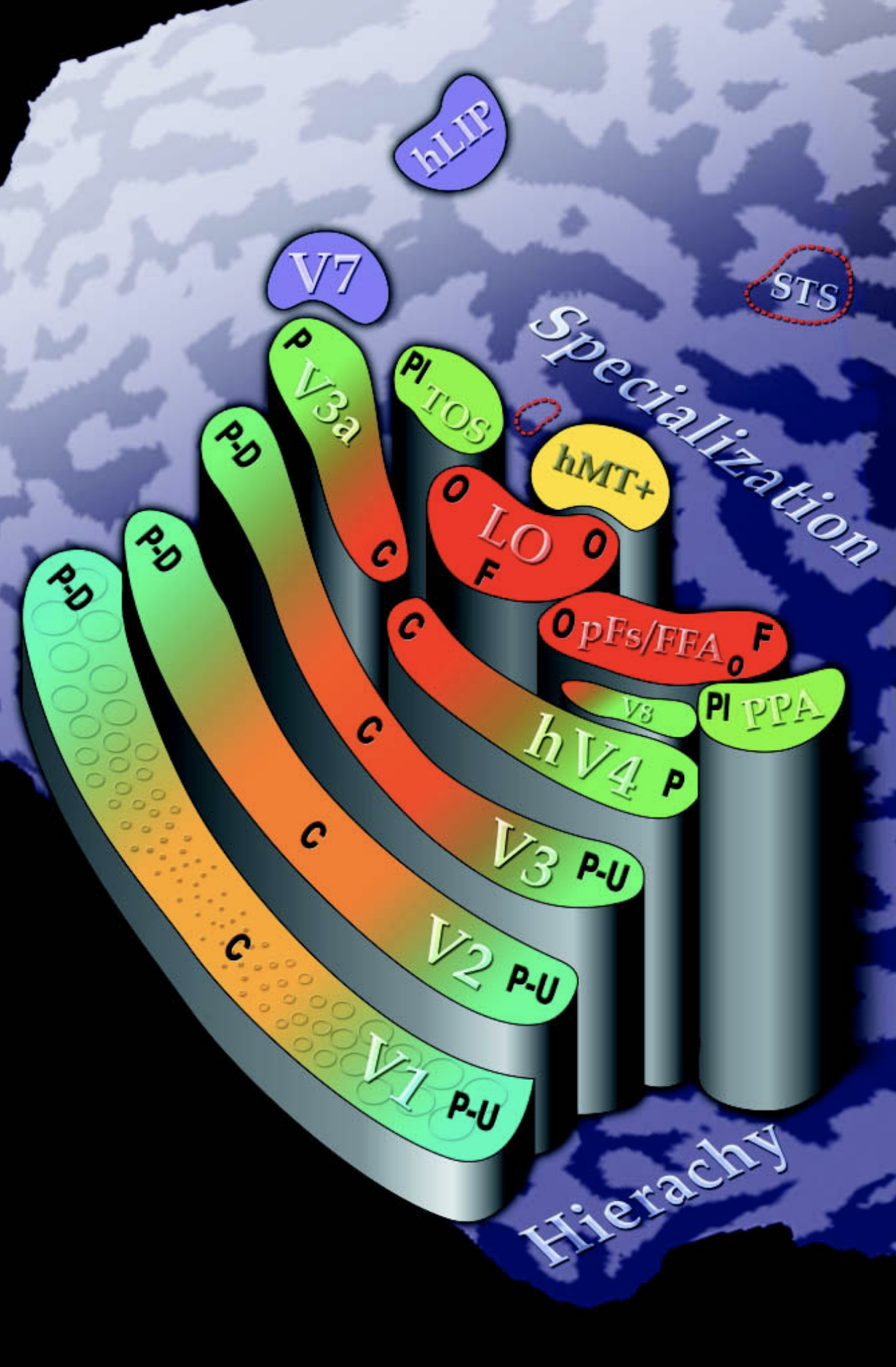

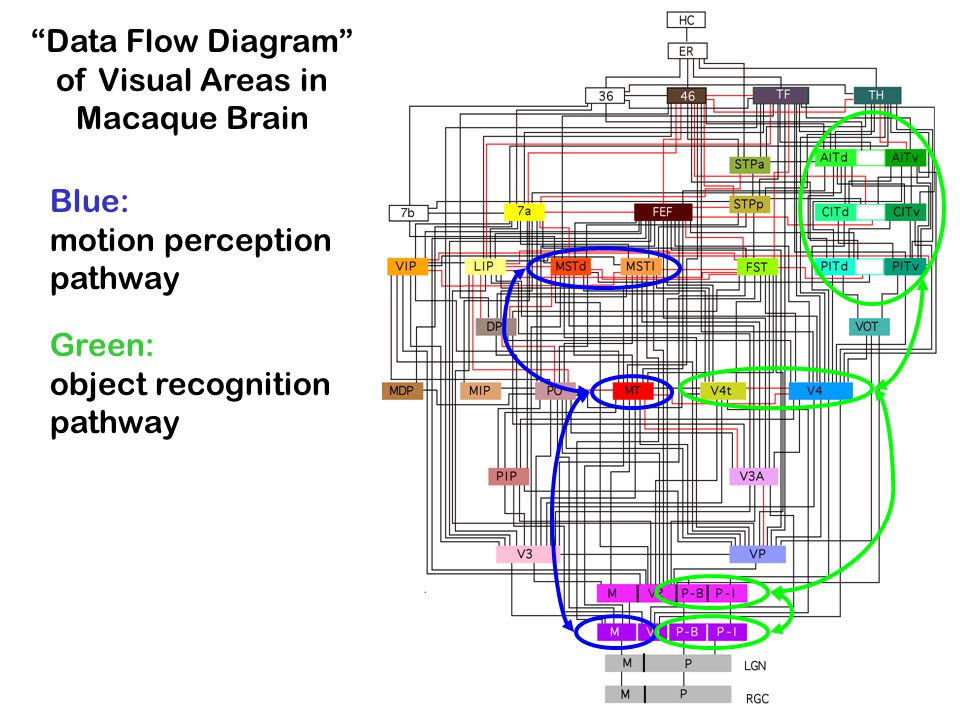

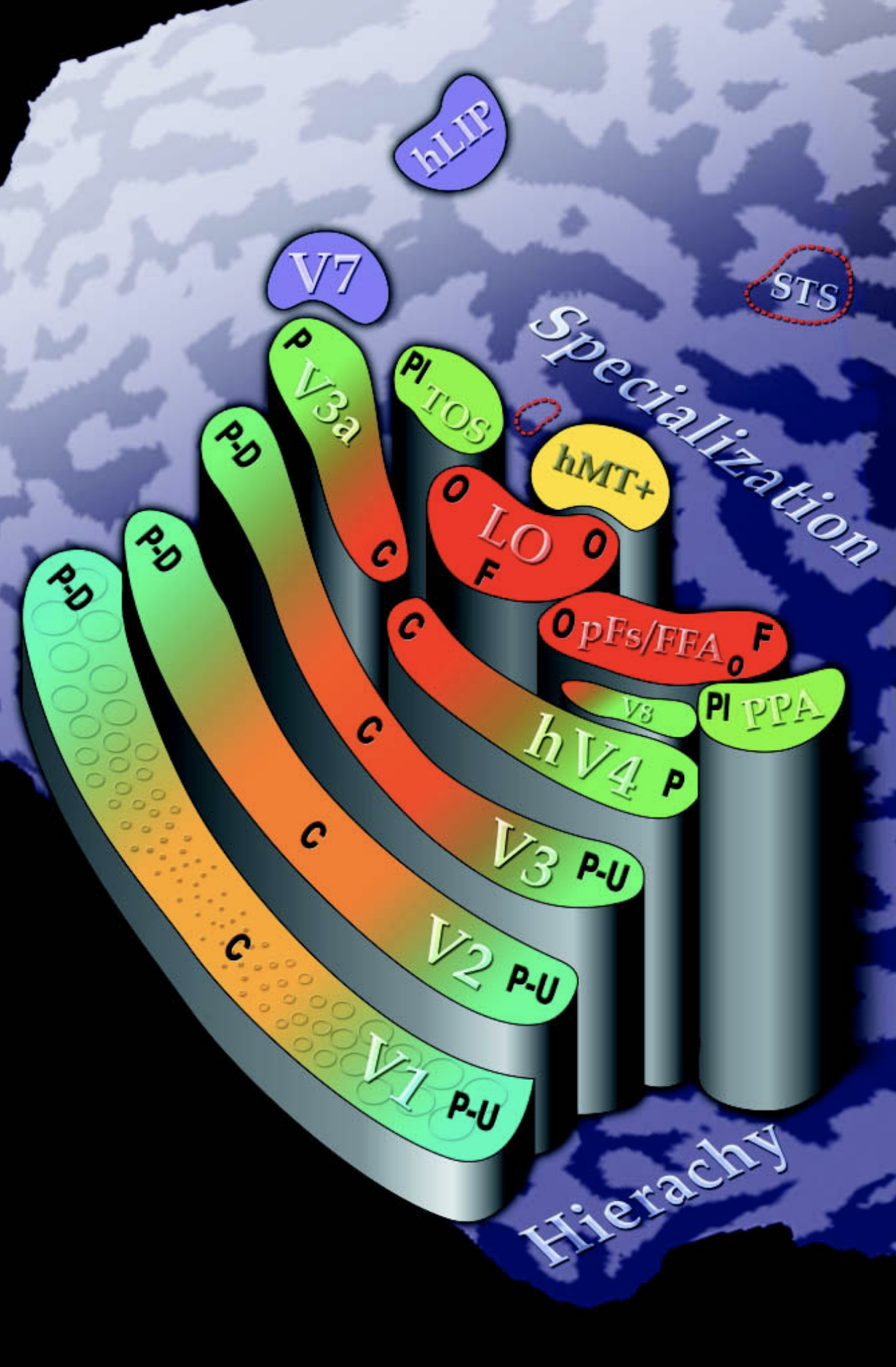

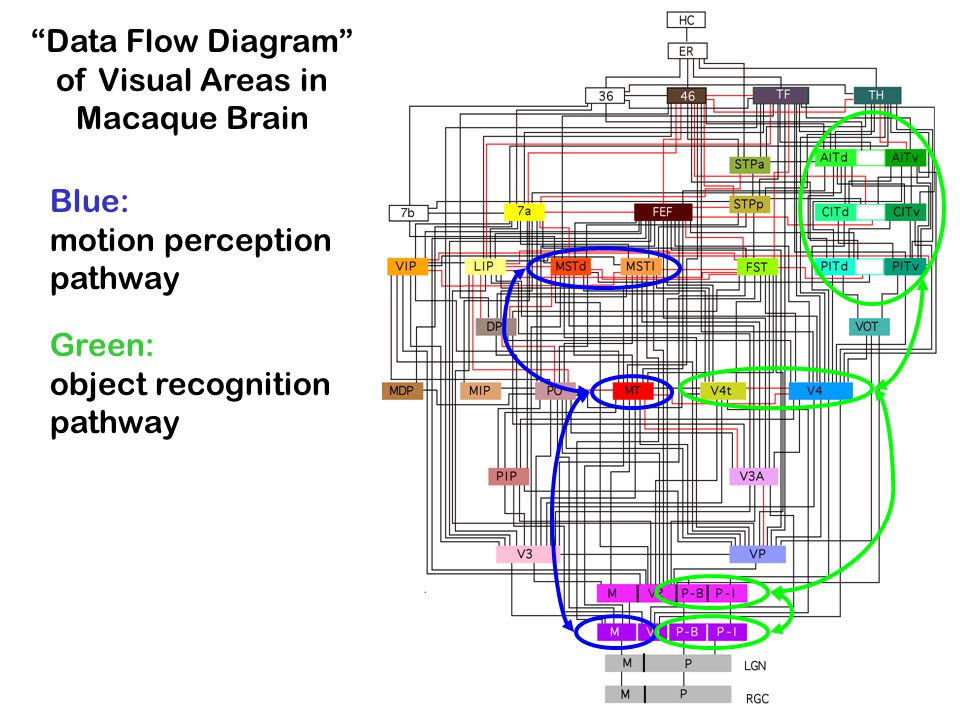

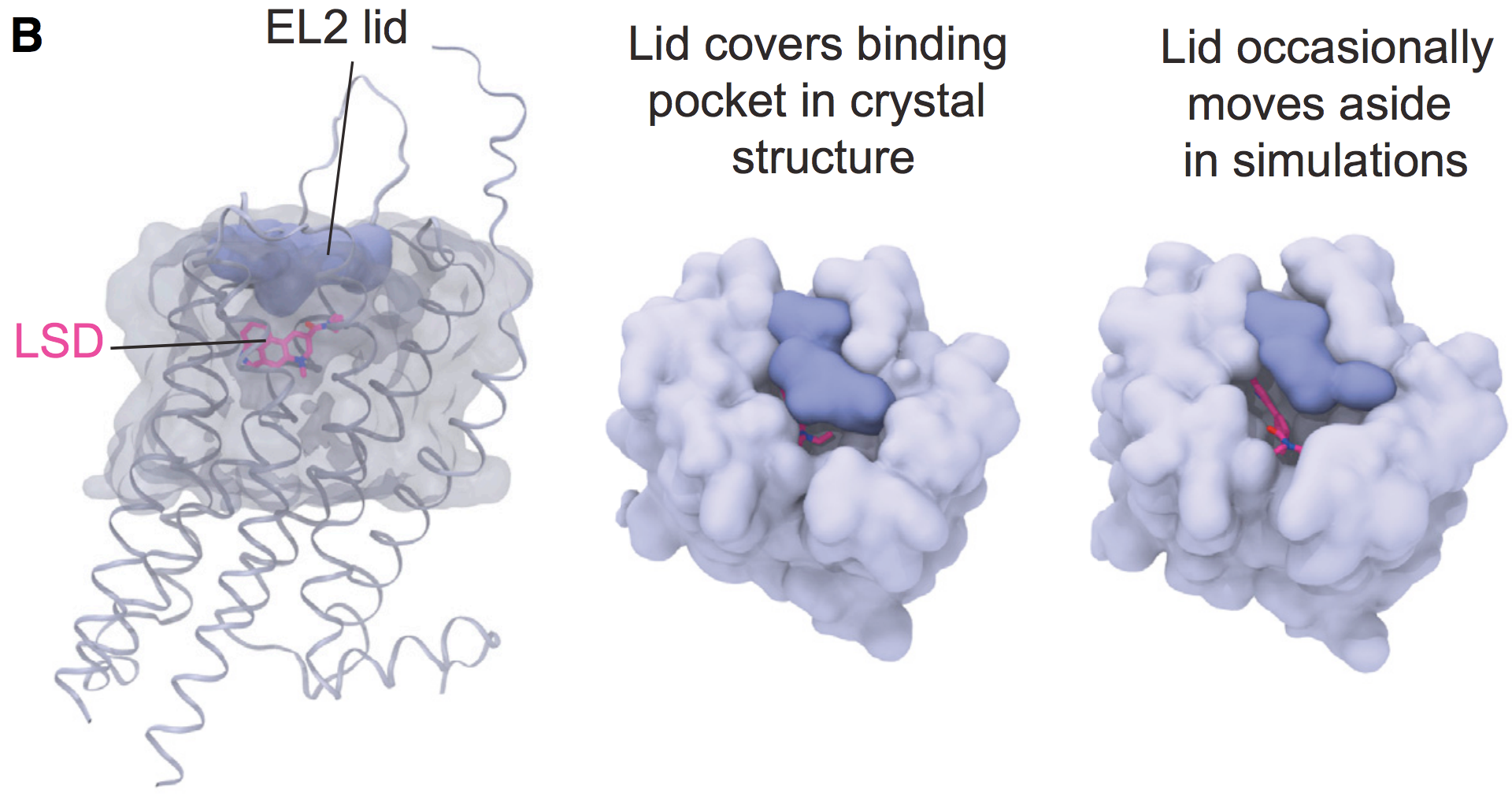

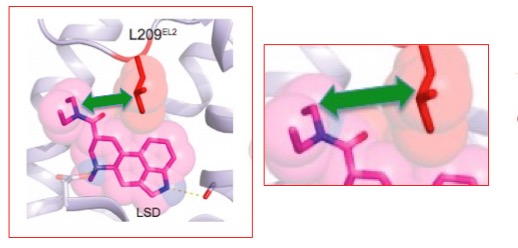

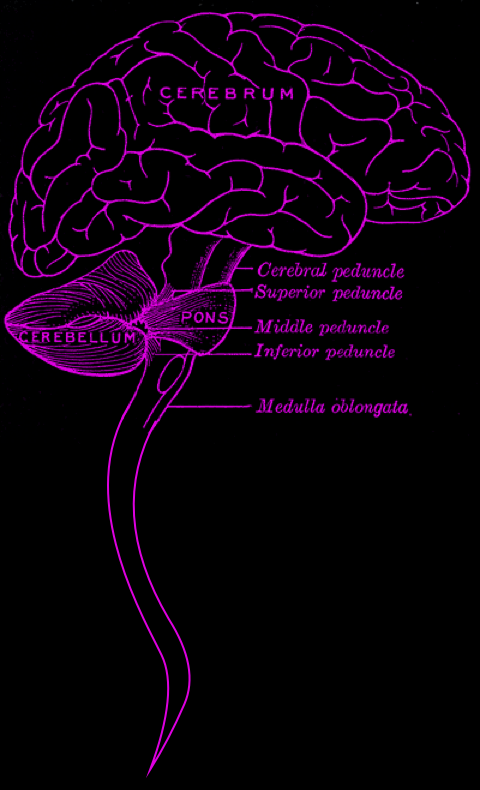

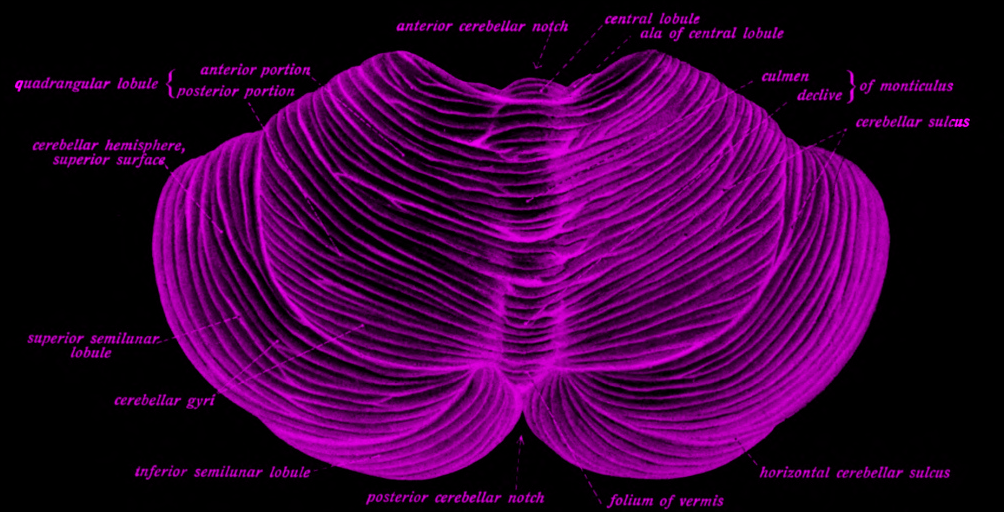

- Unusually detailed anatomical models

- Distorted figures suggesting human flesh

- Many eyes, all of them watching you